Predicting quality isn’t only about machine translation but also the human post-editors who work with it: a deep dive on Memsource’s MT Quality Estimation feature.

Over the past decade, machine translation (MT) has matured into an impressive technology that is capable of meeting and often exceeding the user’s quality expectations. Nevertheless, mistakes still happen, and for many people, the occasionally unpredictable quality of MT may seem challenging to manage.

Fortunately, Memsource has resolved this difficulty through its MT Quality Estimation feature. At the 2021 MT Summit, Aleš Tamchyna, AI Research and Development Manager at Memsource, introduced the feature that dynamically uses past performance data to instantly predict the quality of MT output.

Based on that retrospective, this deep dive explains the reasoning behind the MT Quality Estimation feature and why predicting quality isn’t only about machine translation but also about the human post-editors who work with it.

What is MT Quality Estimation?

Whenever a segment is translated using machine translation in Memsource, our MT Quality Estimation algorithm will assign it one of the following four scores:

- 100 = perfect

- 99 = near perfect

- 75 = high quality

- 0 = low quality

The scores are generated using past performance data. The AI algorithm looks at the machine translation post-editing required for comparable segments and generates an estimate of how much post-editing it thinks will be required. These scores are visible next to individual segments in the CAT tool, and cumulatively for project managers, helping guide not just post-editing, but also big-picture project planning.
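To make the four-level scale more concrete, here is a minimal Python sketch of how an estimated amount of post-editing could be mapped onto the scores above. It is an illustration only: the function names, the use of `difflib` to measure how much a post-editor changed the MT output, and the two cut-off values are all assumptions, not Memsource's actual algorithm or thresholds.

```python
from difflib import SequenceMatcher

# Hypothetical cut-offs chosen for illustration; the real thresholds
# are not stated in this article.
NEAR_PERFECT_MAX = 0.05
HIGH_QUALITY_MAX = 0.25


def edit_ratio(mt_output: str, post_edited: str) -> float:
    """Rough dissimilarity between MT output and its post-edited version.

    0.0 means the post-editor confirmed the MT output unchanged.
    """
    return 1.0 - SequenceMatcher(None, mt_output, post_edited).ratio()


def to_score(predicted_edit_ratio: float) -> int:
    """Map a predicted edit ratio onto the four scores 100, 99, 75, 0."""
    if predicted_edit_ratio <= 0.0:
        return 100  # perfect: no post-editing expected
    if predicted_edit_ratio <= NEAR_PERFECT_MAX:
        return 99   # near perfect
    if predicted_edit_ratio <= HIGH_QUALITY_MAX:
        return 75   # high quality
    return 0        # low quality
```

In a real system the predicted ratio would come from a model trained on past post-editing data; here it is simply passed in as a number.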

Use cases for MT Quality Estimation

What can MT Quality Estimation be used for?

- Predict overall savings with MT before manual translations. You can run it on documents to estimate how much effort will be required by editors. This can help businesses anticipate translation costs and language service providers offer more reliable price estimates.

- Speed up post-editing with scores that are displayed in the editor. Linguists can use them to decide whether they will start from scratch or selectively post-edit the output.

- Avoid post-editing high-quality MT. Translation workflows can be set to automatically skip the manual post-editing step if the quality is deemed to be sufficiently good. In some cases the output can go straight to review; in others, it can be displayed straight to customers.

- Calculate linguist compensation. MT Quality Estimation can be used to create pricing models for post-editing that reflect the actual effort required to post-edit MT. Although many Memsource customers successfully leverage MT Quality Estimation to create pricing models, it is still an open topic that each business has to carefully consider. To learn more about the intricacies of pricing post-editing, read about the Pricing Models for MTPE workshop hosted by Memsource.
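Two of the use cases above, project-level planning and skipping post-editing for high-quality segments, can be sketched in a few lines of Python. This is a hedged illustration, not Memsource's workflow API: `route_segment`, `summarize`, the step names, and the default threshold of 99 are hypothetical.

```python
from collections import Counter

SCORE_BUCKETS = (100, 99, 75, 0)


def route_segment(score: int, skip_threshold: int = 99) -> str:
    """Pick the next workflow step for a machine-translated segment.

    Segments scoring at or above the (hypothetical) threshold skip
    manual post-editing and go straight to review.
    """
    return "review" if score >= skip_threshold else "post-editing"


def summarize(scores: list[int]) -> dict[int, float]:
    """Share of segments in each score bucket, for project planning."""
    if not scores:
        return {bucket: 0.0 for bucket in SCORE_BUCKETS}
    counts = Counter(scores)
    return {bucket: counts[bucket] / len(scores) for bucket in SCORE_BUCKETS}
```

For example, `summarize([100, 100, 75, 0])` reports that half of the segments are expected to need no editing, which is the kind of figure a project manager could use when estimating effort.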

The challenges of development: not just an academic question

MT Quality Estimation recently celebrated its third birthday. When it was launched in 2018, it supported only a limited number of language pairs, and there was a lot of room for improvement in the quality of its predictions. Today, the algorithm evaluates approximately 10 million post-edited segments a month. Thanks to its use of AI technology, every single one of those segments contributes to improving the quality of future predictions. The growing volume of data also makes it possible to support more language pairs, which now number more than 130.

There is a lot of discussion about quality estimation metrics in the academic space. For our AI team, the challenge was to take all the academic insight and adapt it into a usable model for our customers. Bridging the gap between academia and business can be a challenging process.

The challenges were notable: in an enterprise-grade TMS like Memsource, the sheer volume of translation and the variety of domains handled exceed anything considered in more carefully defined academic studies. Additionally, the desired outcomes can be different. In many studies, the goal is to achieve the best possible translations. In the real world, some customers are satisfied with translations that are accurate and timely, if not always elegant.

What affects MT quality estimation scores?

In the past three years, Memsource’s AI team has discovered three main factors that influence post-editing:

- The customer—expectations of how good the MT should be and how much the customer is willing to spend: is a workflow able to justify full post-editing or is light post-editing enough?

- The domain—the specific nature of the document can affect both the performance of the MT engine and the amount of post-editing that is expected. If you are translating low-value user support content, you might be satisfied with occasional mistakes. If you’re translating legal documents, every character can matter.

- The post-editor—each post-editor approaches machine translation differently: some may under-edit, while others might instinctively distrust the output and over-edit instead.

When it comes to the first two factors, Memsource does a lot to help mitigate them. The MT management tools in the Memsource Translate Add-on empower the customer to make sure their expectations are always met, while the Autoselect feature automatically recommends the best performing engine for every language pair. The remaining open challenge is the individual linguist.

The human factor in MT Quality Estimation

MT Quality Estimation has to predict not just the quality of MT, but also the behavior of the post-editor. This is important since scores ultimately rely on the real post-editing done by linguists in the Memsource platform. However, each linguist is different, and their response to any given segment can vary significantly: it can be anything from confirming the MT output unchanged to comprehensively rewriting what the engine produced.

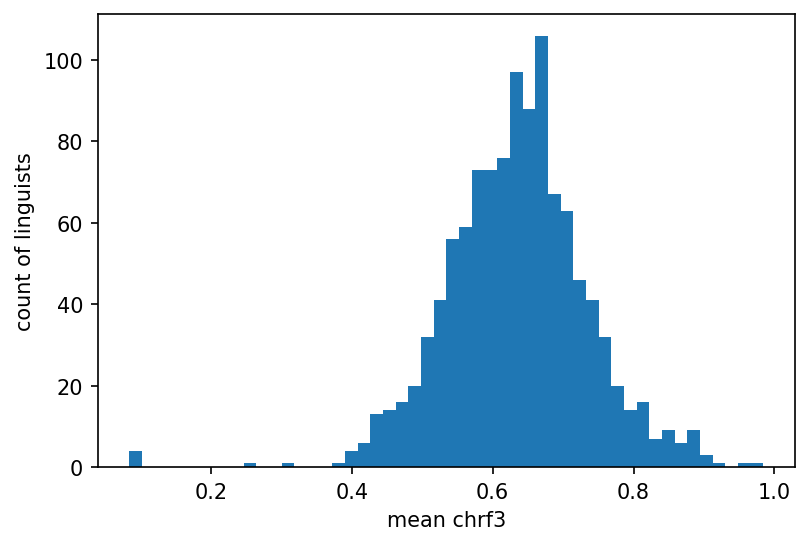

MT Quality Estimation works around this by considering averages, instead of each individual linguist. Although you may see significant variation in how each segment is post-edited, cumulatively you will see a trend that resembles a bell curve, as can be seen in the graph below.

MT Quality Estimation uses this to generate a score that is most likely to represent the response of the average linguist, but in practice, it can mean that occasionally the post-editor might disagree with the assigned score.
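The idea of predicting for the "average linguist" can be illustrated with a small Python sketch. The numbers and linguist names below are invented for illustration, and the edit ratio (0.0 = MT confirmed unchanged, 1.0 = fully rewritten) is an assumed way of measuring post-editing effort, not necessarily the one Memsource uses.

```python
from statistics import mean, pstdev


def average_target(edit_ratios: dict[str, float]) -> tuple[float, float]:
    """Return the mean edit ratio across linguists and its spread.

    The mean is what an averaged prediction aims at; the spread shows
    how far individual linguists may deviate from that prediction.
    """
    values = list(edit_ratios.values())
    return mean(values), pstdev(values)


# Invented observations: three linguists post-editing the same segment.
observed = {"linguist_a": 0.0, "linguist_b": 0.1, "linguist_c": 0.2}
target, spread = average_target(observed)
```

Here the averaged target sits close to `linguist_b`, while `linguist_a` and `linguist_c` would each find the resulting score slightly off, which is the disagreement described above.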

One important conclusion from this is that a perfect MT Quality Estimation score is essentially impossible to achieve since it ultimately depends on the unpredictable character of the individual linguist.

Is there anything that can be done with this? Memsource’s AI team has experimented with the possibility of refining the algorithm to consider one additional factor: the post-editing preferences of each individual linguist.

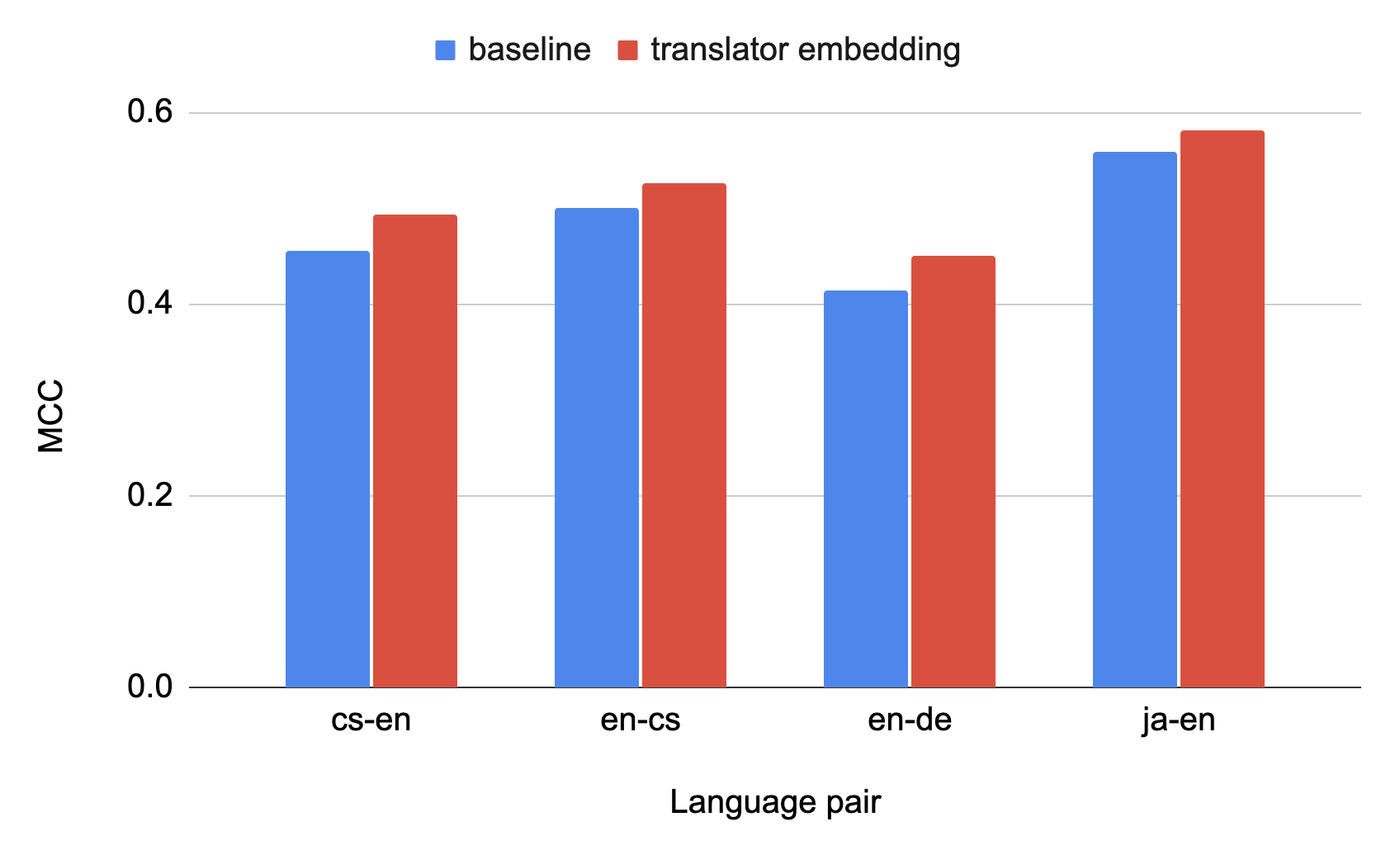

Memsource’s AI team calls this experiment “translator embedding”. It assigns each translator a unique identity and then tracks the amount of post-editing they do for each given segment. Over time, this makes it possible to anticipate whether a post-editor is likely to over- or under-edit a given segment in a specific language pair from a given MT provider.

This information can then be considered by the MT Quality Estimation algorithm so that it can generate a score that is custom-made for each linguist. The following chart shows that the accuracy of the predictions increases with translator embedding:

Although this experiment shows improvements in the quality of prediction, it is not currently a part of the MT Quality Estimation feature. The reason is once again the problem of unpredictability: currently, there is no way to know, at the point when the scores are generated, which linguist will be editing a given segment.
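To give a rough feel for the translator embedding idea, here is a much-simplified Python sketch. It replaces a learned embedding with a single running offset per translator, language pair, and MT provider, so it is a stand-in technique rather than the method Memsource's AI team used. The class name, the key layout, and the edit-ratio scale (0.0 to 1.0) are all assumptions.

```python
from collections import defaultdict

# Hypothetical key: (translator id, language pair, MT provider).
Key = tuple[str, str, str]


class TranslatorOffsets:
    """Running mean of (actual - predicted) edit ratio per translator key."""

    def __init__(self) -> None:
        self._sum: dict[Key, float] = defaultdict(float)
        self._count: dict[Key, int] = defaultdict(int)

    def update(self, key: Key, predicted: float, actual: float) -> None:
        """Record how far this translator's real editing was from the prediction."""
        self._sum[key] += actual - predicted
        self._count[key] += 1

    def adjust(self, key: Key, predicted: float) -> float:
        """Shift a generic prediction by the translator's average offset."""
        count = self._count.get(key, 0)
        if count == 0:
            return predicted  # unknown translator: keep the averaged prediction
        offset = self._sum[key] / count
        return min(1.0, max(0.0, predicted + offset))
```

Note that `adjust` needs the translator's identity as input. That is exactly the information that is missing when scores are generated, which is why this approach remains an experiment.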

Translator embedding represents one of the many possible directions that MT Quality Estimation can take. However, the future of MT Quality Estimation is closely tied to the development of MT technology in general. As the quality of MT output continues to improve, it may one day reach a level where it is no longer necessary to predict quality, or even to think of it as an uncertain variable.